Gradient Descent Optimizer

Gradient Descent:

Gradient descent is an iterative optimization algorithm used to find a minimum of a function. The general idea is to initialize the parameters to random values and then, at each iteration, take a small step in the direction opposite to the gradient (the “slope”), since that is the direction in which the function decreases fastest. Gradient descent is widely used in supervised learning to minimize the error function and find good values for the parameters. Various extensions of the basic algorithm have been designed. Some of them are discussed below:
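The update rule described above can be sketched in a few lines of Python. This is a minimal illustration, not a production optimizer: the function name `gradient_descent`, the example function f(w) = (w - 3)², and the chosen learning rate and step count are all assumptions made for the example.

```python
def gradient_descent(grad, w0, learning_rate=0.1, num_steps=100):
    """Minimize a one-variable function, given a function `grad` for its gradient."""
    w = w0
    for _ in range(num_steps):
        # Step against the gradient, scaled by the learning rate.
        w = w - learning_rate * grad(w)
    return w


# Hypothetical example: f(w) = (w - 3)^2 has gradient 2 * (w - 3)
# and its minimum at w = 3.
w_min = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_min)
```

If the learning rate is too large, the steps can overshoot the minimum and diverge; if it is too small, convergence becomes slow.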

Momentum method:

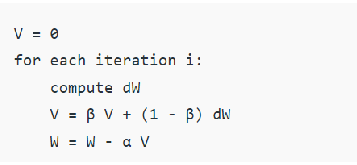

This method accelerates gradient descent by using an exponentially weighted average of the gradients instead of the raw gradient. Averaging makes the algorithm converge towards the minimum faster, because gradient components that oscillate back and forth from one step to the next cancel out, while components that consistently point the same way accumulate. The pseudocode for the momentum method is given below.

V and dW are analogous to velocity and acceleration, respectively. α is the learning rate, and β is the momentum coefficient, normally set to 0.9. The physical interpretation is that a ball rolling downhill builds up velocity along the direction of the slope (gradient) of the hill, which helps it reach a minimum (in our case, a minimum of the loss).

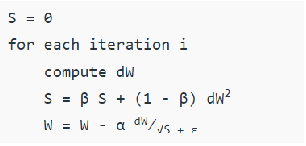
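As a runnable companion to the description above, here is a minimal Python sketch of the momentum update. It assumes the common exponentially weighted average form, V = βV + (1 - β)dW followed by W = W - αV, which may differ in detail from the pseudocode in the image. The function name `momentum_descent` and the example function are illustrative assumptions.

```python
def momentum_descent(grad, w0, alpha=0.1, beta=0.9, num_steps=500):
    """Gradient descent with momentum for a one-variable function.

    Assumed form of the update (exponentially weighted average of gradients):
        V = beta * V + (1 - beta) * dW
        W = W - alpha * V
    """
    w = w0
    v = 0.0  # velocity starts at zero
    for _ in range(num_steps):
        dw = grad(w)                      # current gradient (the "acceleration")
        v = beta * v + (1.0 - beta) * dw  # update the running average (the "velocity")
        w = w - alpha * v                 # move along the averaged direction
    return w


# Hypothetical example: f(w) = (w - 3)^2 has gradient 2 * (w - 3).
w_momentum = momentum_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_momentum)
```

With β = 0.9, the average is dominated by roughly the last ten gradients, which is why short-lived oscillations are damped while a persistent slope keeps contributing.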

RMSprop:

RMSprop was proposed by Geoffrey Hinton of the University of Toronto. The intuition is to apply an exponentially weighted average to the second moment of the gradients (dW²). Each update then divides the gradient by the square root of this average, so parameters with consistently large gradients take smaller steps.
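The RMSprop idea described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: it uses the commonly cited form S = βS + (1 - β)dW² and W = W - α·dW / (√S + ε), where the small constant ε (to avoid division by zero), the function name `rmsprop_descent`, and the hyperparameter values are choices made for this example and do not come from the text.

```python
import math


def rmsprop_descent(grad, w0, alpha=0.01, beta=0.9, eps=1e-8, num_steps=1000):
    """RMSprop for a one-variable function.

    Assumed form of the update:
        S = beta * S + (1 - beta) * dW**2
        W = W - alpha * dW / (sqrt(S) + eps)
    """
    w = w0
    s = 0.0  # running average of squared gradients
    for _ in range(num_steps):
        dw = grad(w)
        s = beta * s + (1.0 - beta) * dw * dw        # second-moment average
        w = w - alpha * dw / (math.sqrt(s) + eps)    # scale the step by 1 / sqrt(S)
    return w


# Hypothetical example: f(w) = (w - 3)^2 has gradient 2 * (w - 3).
w_rmsprop = rmsprop_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_rmsprop)
```

Because the gradient is divided by its own running magnitude, each step has a size of roughly α regardless of how steep the slope is. With a fixed α, the result therefore ends up close to the minimum, within a distance on the order of α, rather than converging to it exactly.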

About the Author

Silan Software is one of India's leading providers of offline and online training in Java, Python, AI (Machine Learning, Deep Learning), Data Science, Software Development, and many other emerging technologies.
